Oracle has released the first Database Proactive Bundle Patch (12.2.0.1.170516) on May 16th 2017 for Oracle database 12.2. Unfortunately, I could not successfully apply the patch to my lab environment. It would patch CDB$ROOT and PDB$SEED but failed patching my PDB (see lines 34 to 36).

$ ./datapatch -verbose

SQL Patching tool version 12.2.0.1.0 Production on Sat May 20 22:49:57 2017

Copyright (c) 2012, 2017, Oracle. All rights reserved.

Log file for this invocation: /u01/app/oracle/cfgtoollogs/sqlpatch/sqlpatch_4836_2017_05_20_22_49_57/sqlpatch_invocation.log

Connecting to database...OK

Note: Datapatch will only apply or rollback SQL fixes for PDBs

that are in an open state, no patches will be applied to closed PDBs.

Please refer to Note: Datapatch: Database 12c Post Patch SQL Automation

(Doc ID 1585822.1)

Bootstrapping registry and package to current versions...done

Determining current state...done

Current state of SQL patches:

Bundle series DBBP:

ID 170516 in the binary registry and not installed in any PDB

Adding patches to installation queue and performing prereq checks...

Installation queue:

For the following PDBs: CDB$ROOT PDB$SEED PDBDEV1

Nothing to roll back

The following patches will be applied:

25862693 (DATABASE BUNDLE PATCH 12.2.0.1.170516)

Installing patches...

Patch installation complete. Total patches installed: 3

Validating logfiles...

Patch 25862693 apply (pdb CDB$ROOT): SUCCESS

logfile: /u01/app/oracle/cfgtoollogs/sqlpatch/25862693/21259009/25862693_apply_DEV1_CDBROOT_2017May20_22_50_22.log (no errors)

Patch 25862693 apply (pdb PDB$SEED): SUCCESS

logfile: /u01/app/oracle/cfgtoollogs/sqlpatch/25862693/21259009/25862693_apply_DEV1_PDBSEED_2017May20_22_50_47.log (no errors)

Patch 25862693 apply (pdb PDBDEV1): WITH ERRORS

logfile: /u01/app/oracle/cfgtoollogs/sqlpatch/25862693/21259009/25862693_apply_DEV1_PDBDEV1_2017May20_22_50_47.log (errors)

Error at line 32: ORA-65254: invalid path specified for the directory

Please refer to MOS Note 1609718.1 and/or the invocation log

/u01/app/oracle/cfgtoollogs/sqlpatch/sqlpatch_4836_2017_05_20_22_49_57/sqlpatch_invocation.log

for information on how to resolve the above errors.

SQL Patching tool complete on Sat May 20 22:51:10 2017

Checking the logfile it showed that a call to “dbms_sqlpatch.patch_initialize” had failed. Looking at what might have caused this error in the procedure I found this:

DIRECTORY_SQL := 'CREATE DIRECTORY dbms_sqlpatch_dir AS ' ||

DBMS_ASSERT.ENQUOTE_LITERAL(ORACLE_HOME || '/rdbms/admin');

DEBUG_PUT_LINE('directory sql: ' || DIRECTORY_SQL);

EXECUTE IMMEDIATE DIRECTORY_SQL;

Why would that fail? The code should actually work. After some further investigation I noticed a sneaky little behavior change in Oracle 12.2. If you create a PDB with the “PATH_PREFIX” clause “CREATE DIRECTORY” will no longer accept absolute path names in that PDB.

SQL> alter session set container=pdbdev1; Session altered. SQL> create or replace directory testdir AS '/u02/oradata/DEV1/PDBDEV1/dump'; create or replace directory testdir AS '/u02/oradata/DEV1/PDBDEV1/dump' * ERROR at line 1: ORA-65254: invalid path specified for the directory SQL> create or replace directory testdir AS './dump'; Directory created. SQL> select directory_path from dba_directories where directory_name = 'TESTDIR'; DIRECTORY_PATH -------------------------------------------------------------------------------- /u02/oradata/DEV1/PDBDEV1/./dump SQL> create or replace directory testdir AS 'dump'; Directory created. SQL> select directory_path from dba_directories where directory_name = 'TESTDIR'; DIRECTORY_PATH -------------------------------------------------------------------------------- /u02/oradata/DEV1/PDBDEV1/dump

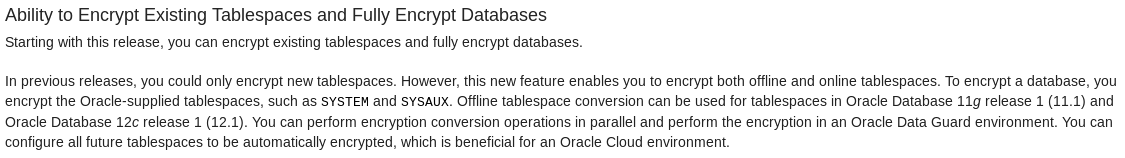

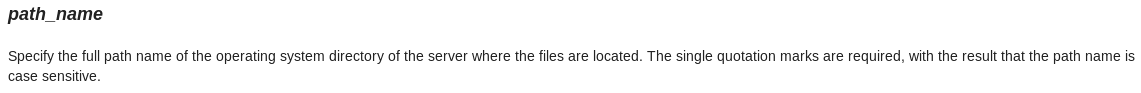

Oracle 12.2 will basically accept any string that doesn’t start with a forward-slash “/” and append it to the PATH_PREFIX. This behavior does not reflect the current documentation:

Oracle 12.1, on the other hand, will accept just any string. But as you can see only the first directory object ends up with the inteded directory path.

SQL> create or replace directory testdir AS '/u02/oradata/DEV1/PDB1'; Directory created. SQL> select directory_path from dba_directories where directory_name = 'TESTDIR'; DIRECTORY_PATH -------------------------------------------------------------------------------- /u02/oradata/DEV1/PDB1 SQL> create or replace directory testdir AS './dump'; Directory created. SQL> select directory_path from dba_directories where directory_name = 'TESTDIR'; DIRECTORY_PATH -------------------------------------------------------------------------------- ./dump SQL> create or replace directory testdir AS 'dump'; Directory created. SQL> select directory_path from dba_directories where directory_name = 'TESTDIR'; DIRECTORY_PATH -------------------------------------------------------------------------------- dump

I believe the intention of Oracle was to restrict the directory object path to “PATH_PREFIX” and subfolders in 12.2. They just did it in a very bad way. I’m going to raise a Service Request to see if that’s expected behavior or a regression and will keep you posted in the comment section of this post. Either way, Oracle will have to fix one or the other.