Today, the good news is that I have time to write this blog post. The less good news is that our basement got flooded and I have to stay home. The bad news is, my current client does not allow remote work 🙁

So, blog post it is…

There are a number of reasons why a SQL Plan Baseline might not get used. Here’s one I was not fully aware of until recently (although it makes perfect sense when you think about it): ALTER SESSION FORCE PARALLEL QUERY [PARALLEL n].

In the simplest of cases the outcome whether a SQL Plan Baseline is used depends on the following:

- PARALLEL_DEGREE_POLICY parameter

- table decoration (DEGREE)

- optimizer enviroment used to generate the plan baseline (ENABLE PARALLEL / FORCE PARALLEL / FORCE PARALLEL n)

- optimizer environment of the session executing the query (ENABLE PARALLEL / FORCE PARALLEL / FORCE PARALLEL n)

- plan in the baseline (serial or parallel plan?)

The base of the test case is a simple table that I’m going to select:

create table t1 as select rownum id, 'ABC' text from dual connect by level <= 100 ;

For every combination of interest we run through the following procedure:

1) open and configure a new session for the parse environment on which the plan baseline is based

2) run this query “select * from t1”

3) create a fixed plan baseline for the generated plan

4) re-connect and configure the parse environment for the executing session

5) run query from step 2) and collect cursor information

do steps 4) and 5) for ENABLE PARALLEL, FORCE PARALLEL, and FORCE PARALLEL n

Test 1: object DEGREE 1, parallel_degree_policy = manual

/ executing session |

enable (serial plan) | force (parallel plan) | force 4 (parallel plan) |

|---|---|---|---|

| enable (default) | used | not used (*3) | not used (*3) |

| force | not used (*1) | used | used |

| force 4 | not used (*2) | used | used |

*1) Degree of Parallelism is 8 because of table property

*2) Degree of Parallelism is 4 because of session

*3) No note in the plan about DOP or baseline

Summary for test 1:

If you have a serial plan in the baseline and use any force parallel on the session the plan baseline is not used and you get a parallel plan.

If you have a parallel plan in the baseline and run the query on a session with ENABLE PARALLEL QUERY (default settings) the plan baseline is not used and you get a serial plan.

Test 2: object DEGREE 1, parallel_degree_policy = limited

/ executing session |

enable (serial plan) | force (parallel plan) | force 4 (parallel plan) |

|---|---|---|---|

| enable (default) | used | used | used (*3) |

| force | not used (*1) | used | used (*3) |

| force 4 | not used (*2) | used | used |

*1) automatic DOP: Computed Degree of Parallelism is 2

*2) Degree of Parallelism is 4 because of session

*3) Degree of Parallelism is 2 because of hint

Summary for test 2:

If you have a serial plan in the baseline and use any force parallel on the session the plan baseline is not used and you get a parallel plan.

Now that we allow for auto DOP the session with ENABLE PARALLEL QUERY can use parallel plans in plan baselines.

Test 3: object DEGREE DEFAULT, parallel_degree_policy = limited

/ executing session |

enable (serial plan (*4)) | force (parallel plan) | force 4 (parallel plan) |

|---|---|---|---|

| enable (default) | used | used | used (*3) |

| force | not used (*1) | used | used (*3) |

| force 4 | not used (*2) | used | used |

*1) automatic DOP: Computed Degree of Parallelism is 2

*2) Degree of Parallelism is 4 because of session

*3) Interestingly, there is no note about DOP in the plan at all. But it uses the plan baseline.

*4) automatic DOP: Computed Degree of Parallelism is 1 because of parallel threshold

Summary for test 3:

If you have a serial plan in the baseline and use any force parallel on the session the plan baseline is not used and you get a parallel plan.

Again, as we allow for auto DOP the session with ENABLE PARALLEL QUERY can use parallel plans in plan baselines. The result is the same as Test 2 but some the notes in the plans differ.

Test 4: object DEGREE DEFAULT, parallel_degree_policy = limited, fake stats so DOP > 1 for all plan baselines

/ executing session |

enable (parallel plan (*1)) | force (parallel plan) | force 4 (parallel plan) |

|---|---|---|---|

| enable (default) | used | used | used |

| force | used | used | used |

| force 4 | used | used | used |

*1) automatic DOP: Computed Degree of Parallelism is 2

Summary for test 4:

Naturally, now that we always have parallel plans in the plan baselines and the object statistics call for auto DOP > 1 the plan baselines get used in all cases.

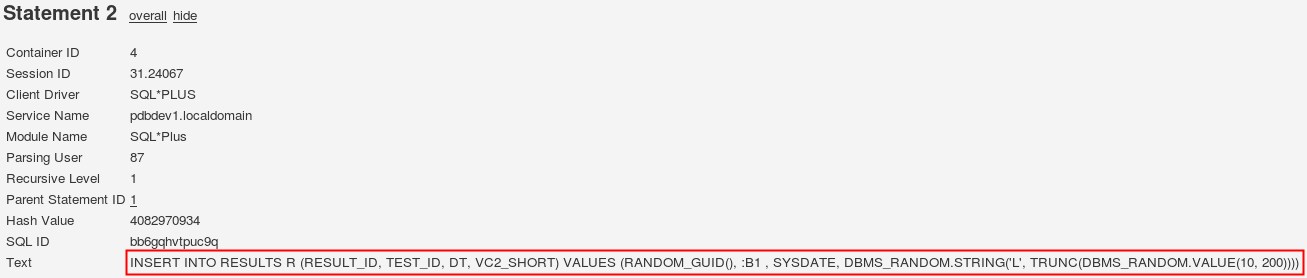

Why did I do this? See, there’s this batch job with a SQL that has a SQL Plan Baseline on it (serial plan). Now, every once in a while the run-time of this batch job goes through the roof and every time this happens I see that the query does not use the baseline (v$sql.sql_plan_baseline is NULL). Also, next to different PLAN_HASH_VALUEs I noticed different OPTIMIZER_ENV_HASH_VALUEs. Checking the session settings V$SES_OPTIMIZER_ENV showed that “parallel_query_forced_dop” was set to “default”, which means “ALTER SESSION FORCE PARALLEL QUERY” was run previously on that session.

But why is it not deterministic? The tool that runs all the batch jobs uses a connection pool, some job steps force parallel and some don’t. We haven’t been able to technically confirm this but everything points towards that this session property is not cleared to default when a connection gets reused. So, sometimes this batch job just gets unlucky by the session it gets from the connection pool.

The solution: Adding second SQL Plan Baseline. This plan is a parallel plan with the same structure as the original serial plan. Now, either one of the plan baselines (serial or parallel plan) is being used depending on the session configuration.

Footnote:

When you use “FORCE PARALLEL QUERY” you might get a serial plan. You’ll see this in the plan notes: “automatic DOP: Computed Degree of Parallelism is 1”. Obviously, this would change some of above results.